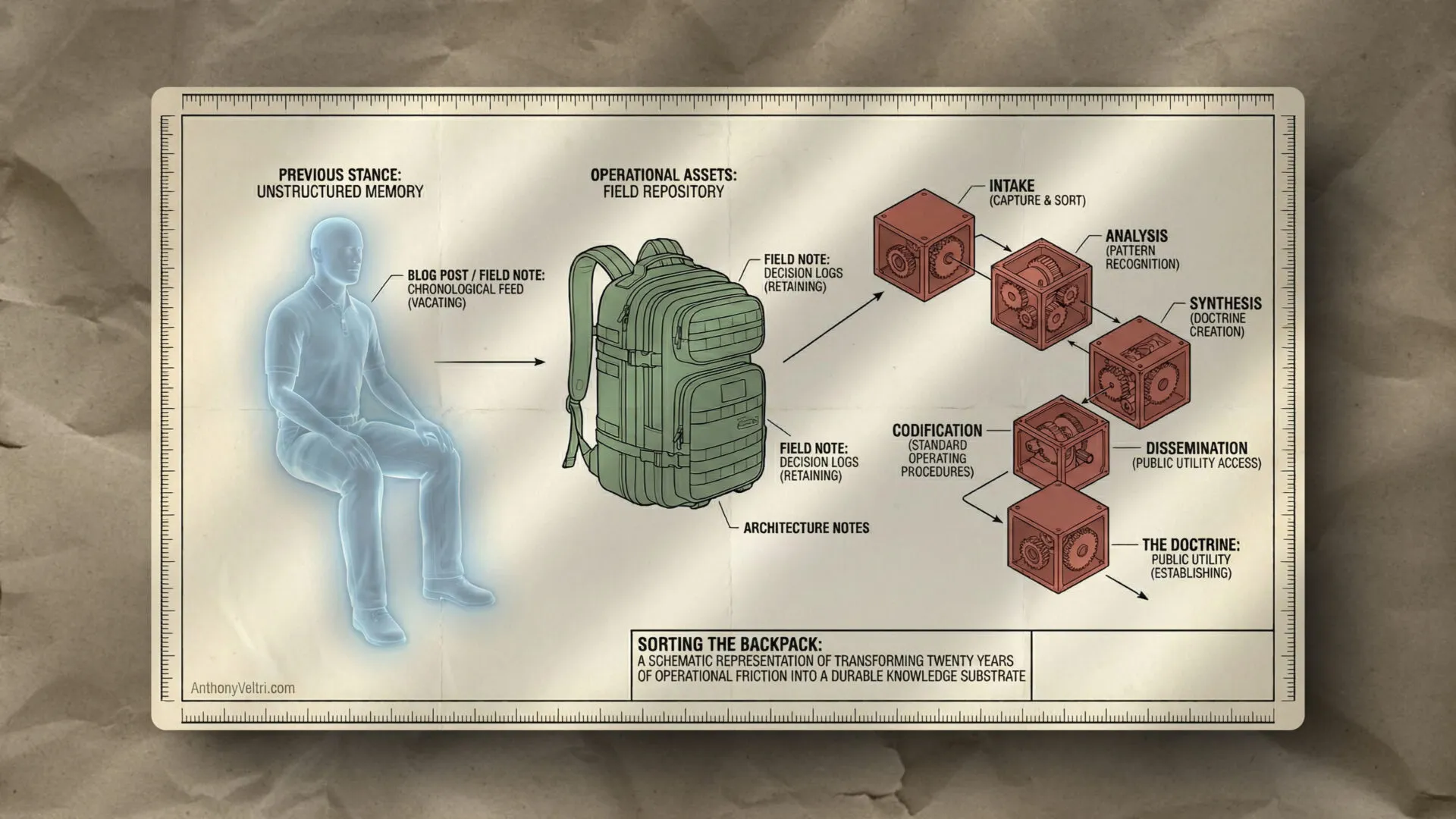

Field Note: Integration Debt vs Temporal Arbitrage

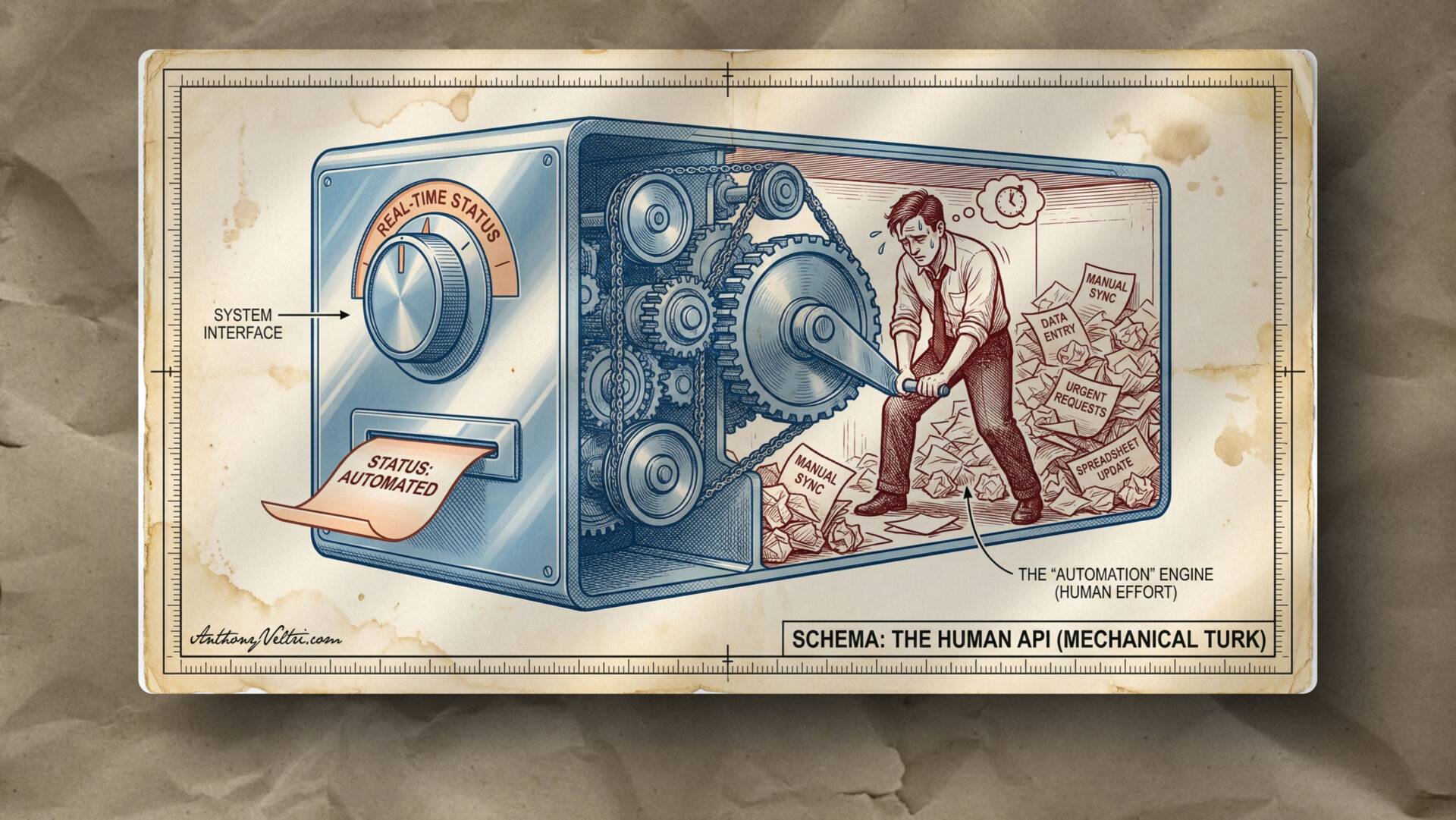

Pattern: Organizations apply integration thinking (centralize, standardize, control inputs) to problems requiring federation thinking (distribute, verify outcomes, govern at service layer). This creates governance theater while actual capability and control evaporate through shadow systems.

Context: Government and enterprise leaders facing AI adoption using governance frameworks designed for stable technology (mainframes, ERPs, data warehouses). Works well when technology changes slowly relative to organizational processes. Fails catastrophically when AI advances faster than standardization completes.

The Problem Statement

Forest Service leadership insists data must be structured and standardized before AI can assist with tasks like searching for tribal implications across the Federal Register, departmental handbooks, and policy guides. By the time standardization completes (18-24 months), AI will have advanced 3-4 generations past the architecture being built. Meanwhile, employees who need answers today are already using ChatGPT on personal devices, creating exactly the governance gap leadership was trying to prevent.

This isn’t isolated to AI. The same pattern appears in IT security (requiring device ownership when identity verification would suffice), network architecture (perimeter defense in cloud environments), and recruitment (agency-centric messaging competing with a billion-dollar attention economy).

The pattern stems from treating federation problems as integration problems, optimizing for local control rather than system outcomes, and mistaking visible mechanisms for actual goals.

Old World vs New World Mental Models

What Leaders Believe They’re Achieving (Old World Integration Thinking):

Control and accountability

- “Central oversight prevents chaos and ensures we can explain decisions to Congress”

Quality assurance

- “Standardization means consistent, reliable outputs across the organization”

Compliance and auditability

- “Uniform systems create audit trails that survive legal challenge”

Resource efficiency

- “One enterprise system is cheaper than 50 different tools”

Risk mitigation

- “Moving slowly and carefully prevents catastrophic failures”

Fairness and equity

- “Everyone using the same system means no district gets unfair advantage”

What They’re Actually Getting:

Illusion of control

- Shadow IT proliferates because official systems don’t meet needs. Leaders lose visibility into actual operations.

Preparation theater

- Endless “getting ready” meetings with no value extraction. AI advances faster than preparation completes.

Compliance theater

- Checking standardization boxes while real risks (missing tribal consultation requirements) go unaddressed.

False economy

- High coordination overhead, long timelines, zero compound learning. Each employee wastes hours weekly on manual work AI could handle.

Risk concentration

- All eggs in one basket. Enterprise system takes 18-24 months to deploy. When it fails, entire organization is blocked.

Equality of poverty

- Everyone is equally unable to do their jobs efficiently. Fair, but nobody wins.

What New World Federation Thinking Delivers:

Distributed accountability (not chaos)

- Federation creates clear provenance. “AI found this in Federal Register section 42.3.7” is traceable.

Empirical quality (not standardization)

- Measure actual outcomes. Did the search catch relevant sections? That’s testable. Quality emerges from results, not input formatting.

Proportional governance (not uniform rules)

- Match oversight to stakes. Retrieval gets innovation lane governance (fast, federated). Decision support gets production lane governance (careful, validated).

Velocity as strategy (not recklessness)

- Extract 70% value today, compound learning over time. By month 6 you’ve learned what matters. Old approach is still in requirements phase.

Resilient exploration (not fragile integration)

- Many small federated experiments. Some fail fast and cheap. Survivors scale. Versus one big system that must work perfectly.

Earned authority (not positional control)

- Teams demonstrating capability with low-stakes retrieval earn trust for higher-stakes work. Authority flows to demonstrated competence.

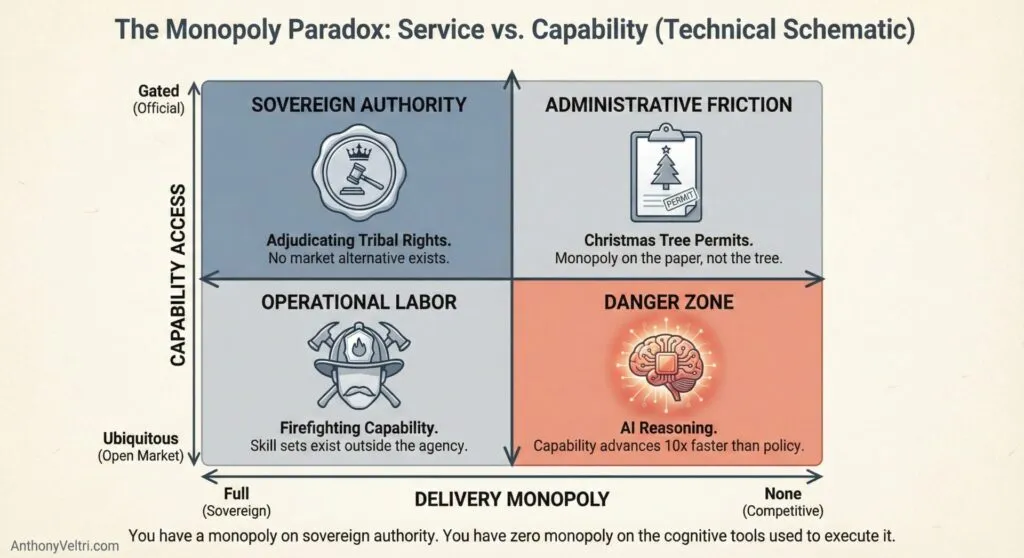

The Monopoly Paradox

Government leaders operate with a critical misunderstanding about what they have monopolies on. To understand the capability gap, we must distinguish between sovereign authorities and cognitive capabilities.

The Paradox Logic Table

| Quadrant | Title | Example | Rationale |
| --- | --- | --- | --- |
| Top-Left (Full Monopoly / Gated Access) | Sovereign Authority | Adjudicating Tribal Rights | Only the Federal Government can legally resolve these status claims. No app can “disrupt” this legal authority. |
| Top-Right (None / Gated Access) | Administrative Friction | Christmas Tree Permits | You hold the monopoly on the paper permit, but the “service” of obtaining a tree has market alternatives (private farms). |
| Bottom-Left (Full Monopoly / Ubiquitous Access) | Operational Labor | Wildland Firefighting | You manage the land, but the capability to fight fire is a skill set found in private sector and state agencies. |
| Bottom-Right (None / Ubiquitous Access) | Cognitive Capability | AI Reasoning / Search | AI advances the ability to process data (the “How”) and is available to everyone instantly. |

You have a monopoly on sovereign authority. Only you can issue a final adjudication on tribal rights or manage federal lands.

You do NOT have a monopoly on cognitive capability. You are competing with the open market for the tools used to execute that authority. Employees will use the most effective cognitive tools available (ChatGPT, Claude) regardless of whether official systems are ready.

Leaders assume a monopoly on authority creates a buffer against slow technology adoption. That worked for marketing transitions (2005-2020). Citizens needed permits regardless of website quality. Annoying, but mission still executed. It does not work for capability gaps. AI tools are free, instantly accessible, and dramatically more capable than the status quo.

Policy does not stop Shadow IT. It only stops Visible IT.

The monopoly buffer fails for capability gaps because AI tools are free or cheap (ChatGPT, Claude), instantly accessible (no procurement or IT approval), dramatically more capable than the status quo (not 10% better, 10x better), and invisible to leadership (personal devices, after hours).

An employee needing to search tribal implications won’t wait 18 months for official systems. They’ll paste documents into Claude tonight. You’ve lost governance exactly when you most needed it.

Why This Transition Is Unforgiving

Government leaders got away with slow adoption during the marketing transition because they had monopolies on services. Citizens couldn’t get Christmas tree permits elsewhere, so bad websites were a tolerable frustration, not a mission failure.

That buffer doesn’t exist for AI. Employees don’t need permission to use ChatGPT. Shadow IT happens instantly and invisibly. The capability gap compounds daily, not yearly.

Marketing lag consequences:

- Annoyed citizens

- Harder recruiting

- Mission still executes (people need permits, firefighters get hired)

AI lag consequences:

- Shadow IT invisibly bypasses governance

- Capability gap grows exponentially, not linearly

- Official systems become legacy on arrival

- Loss of mission capacity (employees working around broken tools)

- Talent exodus (best people won’t tolerate decade-old capability)

The Velocity Mismatch Gets Worse Over Time:

Marketing evolution (slow, linear):

- Year 1: Some agencies get social media

- Year 5: Most agencies have Facebook pages

- Year 10: Everyone has moved online

- Gap between leaders and laggards: manageable

AI evolution (fast, exponential):

- Month 1: AI can search documents

- Month 6: AI can draft responses, analyze data, generate reports

- Month 12: AI can coordinate multi-step workflows autonomously

- Month 18: AI capabilities have advanced 3 generations past where planning started

If standardization takes 24 months, you’re optimizing for AI that’s four generations old by deployment. Employees who went around you have been compounding learning for two years while leadership was in requirements meetings.

You’re not competing for permits (you have the monopoly). You’re competing for:

- Operational capacity (your tools vs available tools)

- Talent retention (best people demand modern capability)

- Governance visibility (shadow IT vs official systems)

- Temporal relevance (18-month projects vs 6-month AI generations)

The Standardization Trap

What Leaders Are Actually Hoping To Achieve

When government leaders order 18-24 month standardization projects before AI adoption, they’re pursuing entirely legitimate goals that have worked for decades:

Create Stable Foundation

- “If we standardize data formats now, every team can build on consistent infrastructure”

- “We won’t have to redo work when requirements change”

- “Future projects will move faster because foundation is solid”

Enable Scale and Reuse

- “Once data is standardized, we can deploy solutions across all districts”

- “What works in Region 1 can be replicated in Region 6”

- “We avoid each team solving the same problem differently”

Reduce Long-Term Costs

- “Yes, standardization takes 2 years, but we’ll save 10 years of maintenance headaches”

- “Paying upfront prevents technical debt downstream”

- “One clean system is cheaper than maintaining 50 fragmented ones”

Ensure Quality and Consistency

- “Standardized inputs mean predictable outputs”

- “We can validate once instead of validating 50 different formats”

- “Quality assurance becomes manageable at scale”

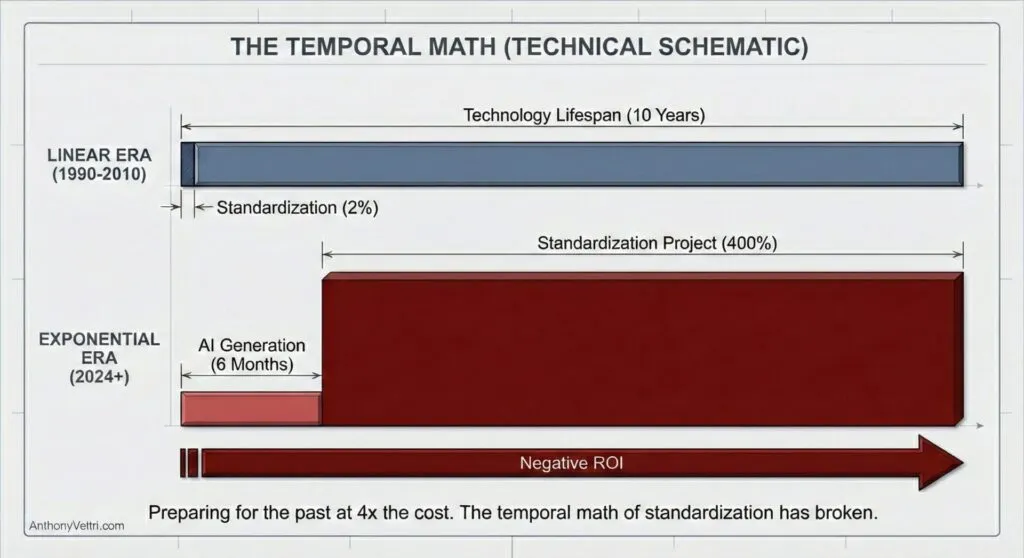

These goals are not wrong. They’re how you successfully deployed ERPs, data warehouses, and enterprise systems for 40 years (1980-2020). The strategy worked because technology was relatively stable. You could spend 3 years standardizing for an ERP that would run for 15 years. The standardization cost was amortized across more than a decade of use.

Why This Strategy Worked (Past Tense)

Mainframe Era (1960s-1980s):

- Technology generation: 10-15 years

- Standardization project: 2-3 years

- Payoff period: 12+ years

- Math: Works brilliantly

ERP/Data Warehouse Era (1990s-2010s):

- Technology generation: 5-10 years

- Standardization project: 18-24 months

- Payoff period: 8+ years

- Math: Still works well

Leaders who succeeded in these eras learned that “slow and careful” beats “fast and chaotic.” They’re right, given the technology landscape they operated in. Standardization was a genuine competitive advantage.

Why This Strategy Fails Now

AI Era (2023-present):

- Technology generation: 3-6 months

- Standardization project: 18-24 months

- Payoff period: Unknown (likely incremental delivery rather than step-change)

- Math: Too noisy at current resolution. Obscured by local optima/minima (needs macro view)

Note: Unlike the previous eras where standardization delivered a clear “before/after” payoff, AI improvements may arrive incrementally rather than as waterfall-style delivery. The math likely still works, but the signal-to-noise ratio at monthly or quarterly resolution makes it impossible to distinguish genuine payoff from local optima and minima. From a 25-year perspective, the aggregate value will likely be obvious and the cost-benefit calculation clear. But at decision-making resolution (quarterly budgets, annual planning), the noise overwhelms the signal. We are still learning what temporal scale is required to see the pattern.

By the time standardization completes, you’ve crossed 4-6 AI generations. You’re not just slightly behind. You’ve optimized for technology that no longer exists.

The Edsel vs Electric Vehicle Gap

The Ford Edsel (1958) is famous for being obsolete on arrival after years of planning and development. But the Edsel was still a car with an internal combustion engine, four wheels, and a steering wheel. It competed in the same category as other 1958 vehicles. It failed because consumer preferences shifted (toward smaller, more efficient cars), not because the entire category changed.

The 24-month AI gap is worse. Much worse.

It’s not Edsel (1958 car) vs modern ICE car (2024 car with better features).

It’s closer to: Standardizing for horse-drawn carriages when automobiles are arriving.

Or: Standardizing for dial-up modem infrastructure when broadband is 12 months away.

Or more precisely: Standardizing for manual card catalogs when Google is emerging.

Here’s the actual gap in AI (Dec 2024 to Dec 2026 estimate):

December 2024:

- Claude/GPT-4 can search documents, answer questions, draft responses

- Needs structured prompts, specific instructions

- Mostly text-based, some image understanding

- Requires human oversight for reliability

December 2026 (projected based on current velocity):

- AI agents coordinate multi-step workflows autonomously

- Visual understanding reaches human parity across domains

- Reasoning capabilities enable novel problem-solving without examples

- Self-correction and uncertainty calibration dramatically improved

- Integration with systems of record becomes seamless

- Voice, video, and multimodal interaction becomes standard

Your standardization project assumed December 2024 capabilities. You’ve designed data formats, governance processes, and architecture for AI that needs structured inputs and heavy human oversight.

By deployment, AI will handle messy inputs natively, coordinate across heterogeneous sources autonomously, and operate at capability levels you didn’t account for in your requirements. Your careful preparation is optimized for technology that no longer exists.

It’s not like the Edsel being outdated by 1959 Chevrolets. It’s like planning for horse stables when the Model T is already in production.

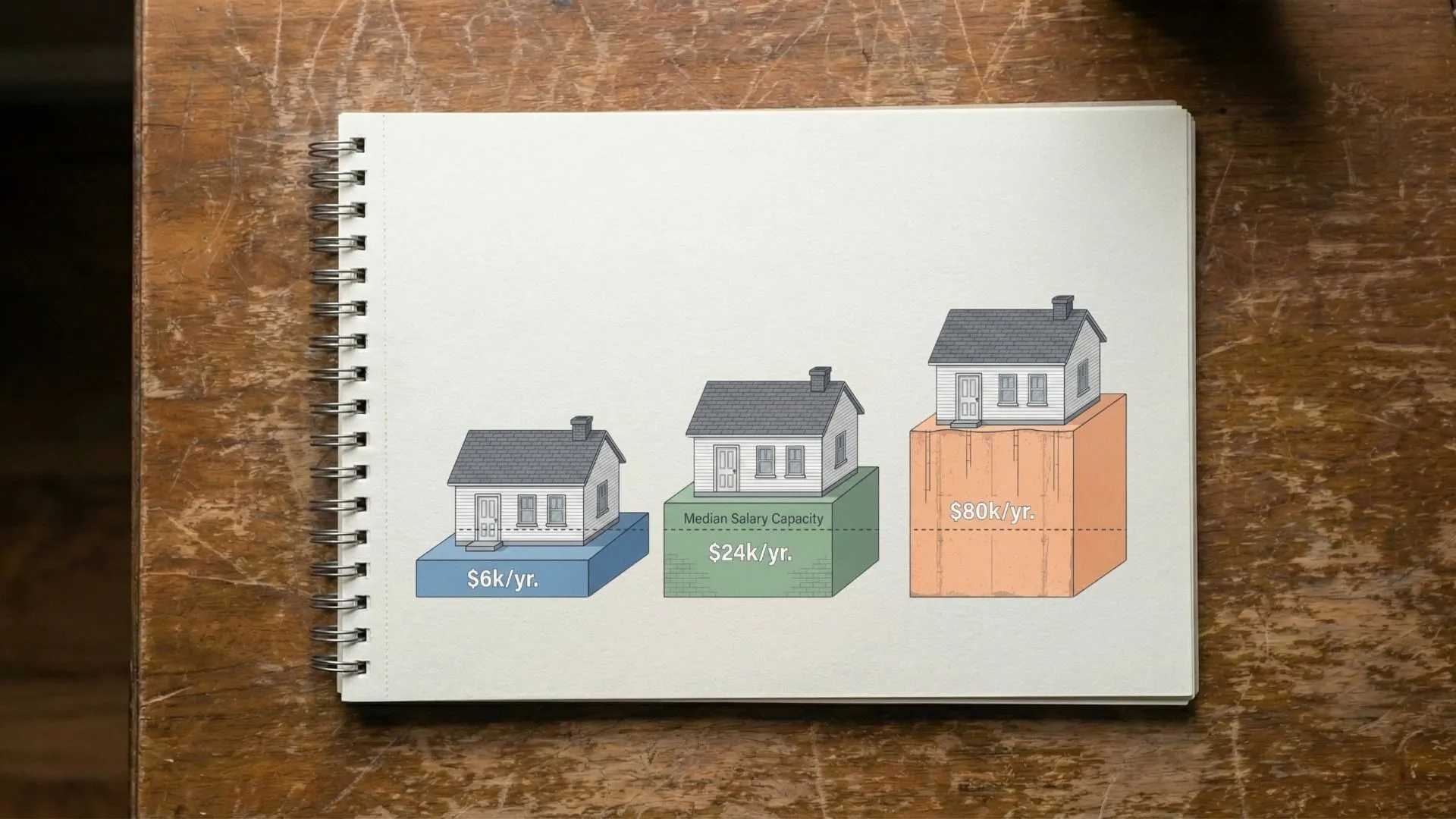

The Temporal Math

Old World (Technology Stable):

- Standardization: 24 months

- Technology generation: 120 months (10 years)

- Standardization cost: 20% of technology lifespan

- Result: Excellent ROI

New World (Technology Exponential):

- Standardization: 24 months

- Technology generation: 6 months

- Standardization cost: 400% of technology lifespan

- Result: Negative ROI (you’re preparing for 4 generations ago)

You can’t amortize 24 months of preparation across 6 months of relevance. The math doesn’t work. This is the logical anchor of why integration thinking fails for AI adoption. Not because integration is inherently wrong, but because the temporal economics have inverted.
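The ratio behind this comparison can be checked with a few lines of Python. This is a minimal sketch: the function names `prep_cost_ratio` and `generations_elapsed` are made up for illustration, and the only inputs are the month counts quoted above (24 months of standardization against a 120-month or 6-month technology generation).

```python
def prep_cost_ratio(standardization_months: float, generation_months: float) -> float:
    """Preparation time as a fraction of one technology generation's lifespan."""
    if generation_months <= 0:
        raise ValueError("generation_months must be positive")
    return standardization_months / generation_months


def generations_elapsed(standardization_months: float, generation_months: float) -> int:
    """Whole technology generations that pass while standardization is under way."""
    if generation_months <= 0:
        raise ValueError("generation_months must be positive")
    return int(standardization_months // generation_months)


# Figures taken from the two scenarios in the text.
old_world = prep_cost_ratio(24, 120)  # 24 / 120 = 0.2, i.e. 20% of the lifespan
new_world = prep_cost_ratio(24, 6)    # 24 / 6 = 4.0, i.e. 400% of the lifespan

print(f"Old world: {old_world:.0%} of lifespan, "
      f"{generations_elapsed(24, 120)} generations elapsed during preparation")
print(f"New world: {new_world:.0%} of lifespan, "
      f"{generations_elapsed(24, 6)} generations elapsed during preparation")
```

Any ratio above 100% means the preparation outlasts the thing being prepared for, which is the inversion the section describes.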

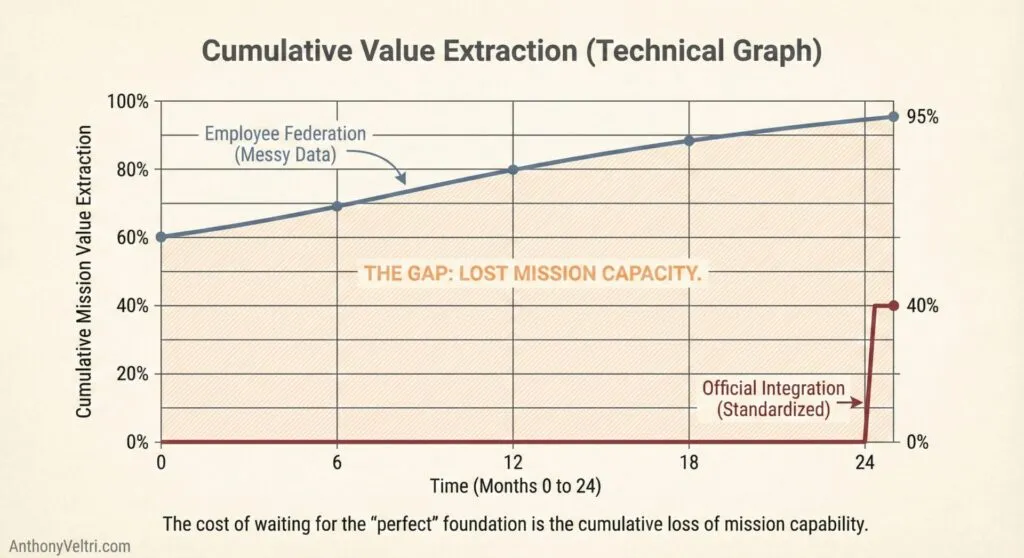

Meanwhile, Employees Extract Value Today

Month 0 (Present):

- Official approach: Begin standardization project, 0% value extraction

- Employee approach: Use ChatGPT on messy data, 60% value extraction

Month 6:

- Official approach: Still standardizing, 0% value extraction

- Employee approach: Learned what works, now at 75% value extraction, compounding knowledge

Month 12:

- Official approach: Still standardizing, 0% value extraction

- Employee approach: 85% value extraction, established workflows, trained colleagues

Month 24:

- Official approach: Deploy system optimized for AI from Month 0 (now 4 generations old), finally reaching 40% value extraction

- Employee approach: 95% value extraction using current-generation AI, 24 months of compounded learning

The employee who “violated policy” by not waiting has extracted 20+ months of value and learned what actually matters operationally. The official system arrives optimized for problems that no longer exist, in ways that no longer matter.

What Makes AI Different

Previous Technology Transitions: You could see them coming with years of warning. Mainframe to client-server, on-premise to cloud, desktop to mobile. Each transition took 10-15 years from “emerging” to “dominant.” You had time to plan, standardize, and execute methodically.

AI Transition: Capabilities are emerging and becoming dominant in 12-18 month cycles. You don’t get years to prepare. By the time you’ve standardized, the capability landscape has fundamentally shifted.

Compound Effect: Each AI generation doesn’t just add features. It changes what’s possible architecturally.

- GPT-3 (2020): Impressive text generation, required careful prompting

- GPT-4 (2023): Reasoning, images, function calling, changed what you could build

- Claude 3.5 Sonnet (2024): Computer use, agentic workflows, artifact creation

- Next generation (2025): Anticipated to handle multi-step reasoning, improved factuality, tighter system integration

Each generation doesn’t just get “better.” It opens entirely new architectural possibilities. Standardizing for one generation means missing the next generation’s capabilities entirely.

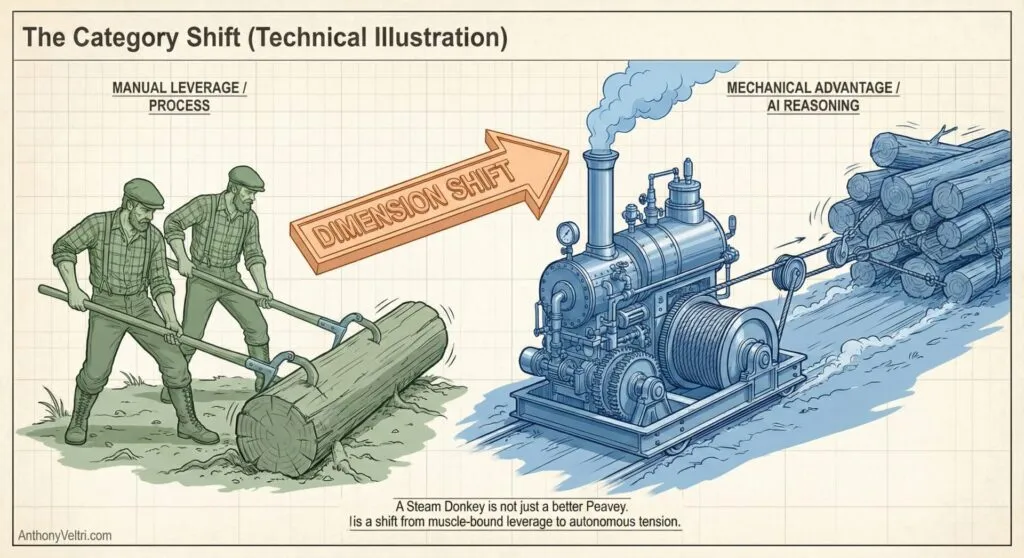

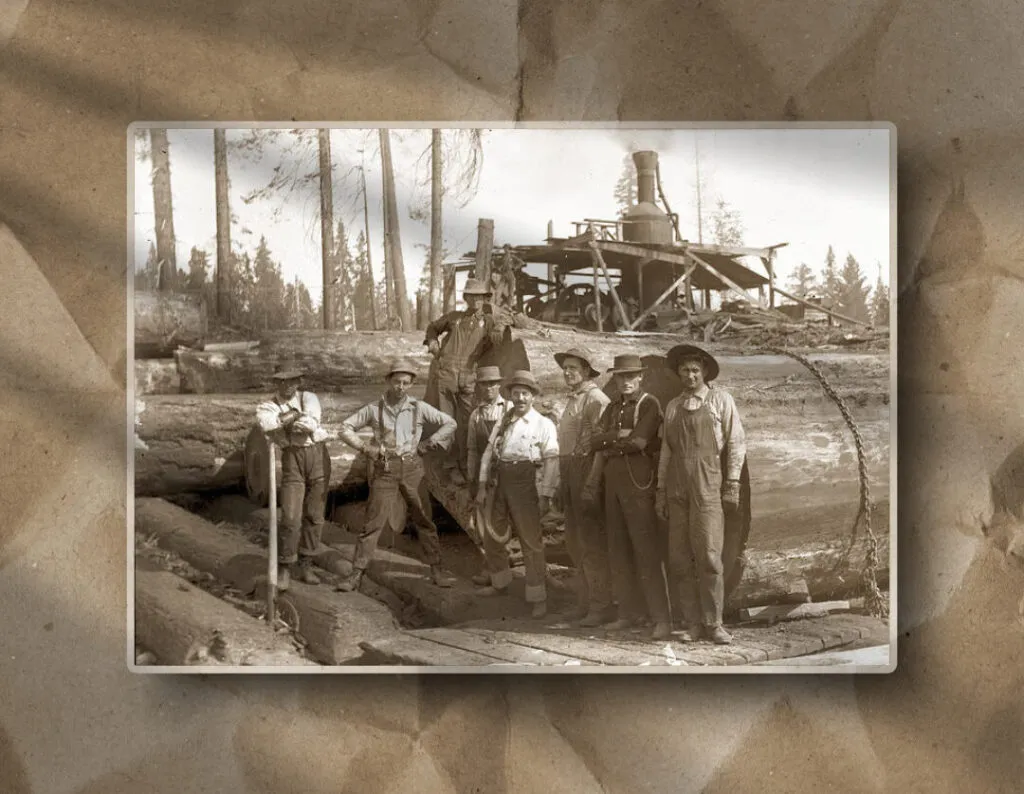

The Steam Donkey Principle:

The Steam Donkey didn’t just make loggers work harder or smarter; it changed the category of what was possible (shifting the work from muscle-bound leverage to mechanical advantage).

A Peavey (the traditional hand tool for rolling logs) still relies entirely on the operator’s physical strength to overcome friction. It is a linear tool for a linear task. In contrast, the Steam Donkey introduced cable yarding, which moved the work into a different domain entirely (one defined by engine power, autonomous tension, and mechanical velocity).

- Competing in the Wrong Dimension: Fighting AI with “more process” or “better standardization” is like training loggers to pry harder with a Peavey.

- The Muscle Trap: You are still competing in the dimension of human muscle and manual leverage.

- Category Failure: While you are perfecting your prying technique, the rest of the industry has moved into the era of engine-driven tension.

Organizations that treat AI like a “better sledgehammer” (or a better Peavey) are attempting to solve exponential problems with linear effort. By the time your standardization project is finished, the logs have already been yarded by a competitor who recognized the category shift.

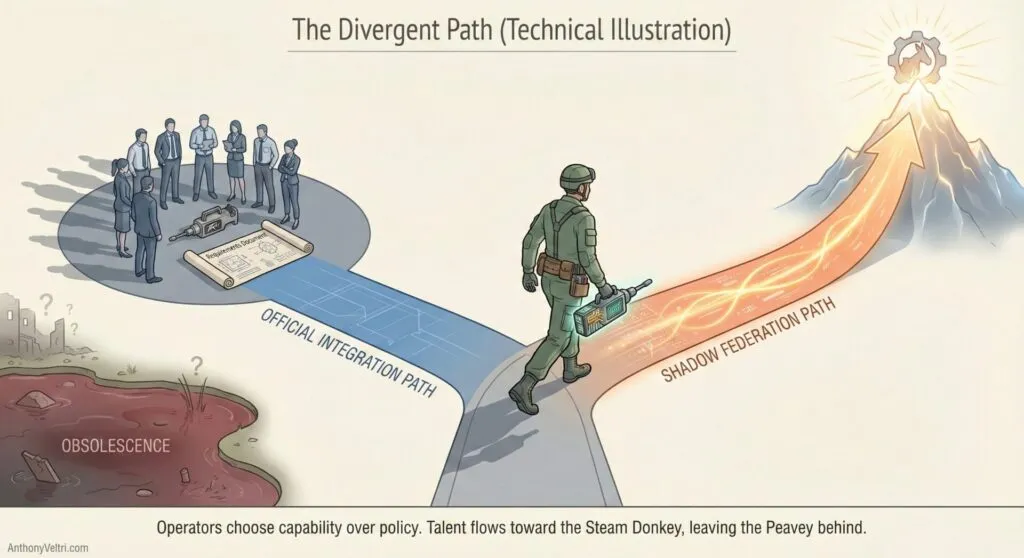

The Operator’s Dilemma

In a world defined by the rapid emergence of Steam Donkeys (AI), the most talented people are those who naturally gravitate toward mechanical advantage. When an organization insists on Peavey-only workflows (manual standardization and rigid integration thinking), it creates a specific, terminal friction for top-tier talent.

The Decay of Professional Market Value

High-performers (the “Operators” who maintain your mission capacity) do not leave because the work is difficult. They leave because the work is becoming obsolete. An Operator knows that if they spend two years prying with a Peavey while the rest of the world learns to rig cable yarding, their professional market value decays exponentially. They cannot afford to wait for a 24-month standardization project to finish when a technology generation lasts only six months.

Shadow IT as a Rational Mission Response

Your best people are the ones most likely to “violate policy” by using ChatGPT or Claude on personal devices. Within an integration mindset, this looks like rebellion or a security risk. Within a federation mindset, this is recognized as a rational mission response. The Operator is choosing the more capable tool to accomplish the goal (demonstrating professional responsibility by refusing to be limited by obsolete infrastructure).

The “Operator’s Dilemma” is straightforward: Stay and watch your skills become legacy (while struggling to meet mission goals) or move to an environment that prizes temporal arbitrage and federated velocity. If you do not provide the Steam Donkey, your best loggers will find someone who does.

The Strategic Mistake

Leaders are making a category error. They’re treating AI like previous enterprise technology:

“We’ll standardize first, then deploy at scale, then maintain for years.”

This worked for:

- ERPs (standardize for SAP, run for 15 years)

- Data warehouses (standardize schemas, use for decade)

- Enterprise service buses (standardize integrations, maintain for years)

It fails for AI because AI is not a stable platform you deploy once. It’s a rapidly evolving capability that advances whether you’re ready or not.

The correct mental model is not: “Deploy enterprise system for decade of use”

The correct mental model is: “Ride wave of advancing capability, updating continuously”

You can’t “finish” AI adoption. You can only position for continuous adaptation. Standardization projects assume you can reach a stable end-state. AI means there is no stable end-state.

What To Do Instead

Not: “Standardize everything, then deploy” But: “Extract value now with federated approach, standardize what survives contact with reality”

Not: “Wait 24 months for perfect foundation” But: “Learn for 3 months what actually matters, standardize those specific things in parallel with use”

Not: “One enterprise system for all use cases” But: “Innovation lane for exploration, production lane for critical systems, different timelines for different stakes”

Not: “Prevent all mistakes through careful preparation” But: “Make small mistakes fast, learn what matters, scale what works”

The goal isn’t “no standardization.” The goal is “standardize at the speed of learning, not the speed of committee consensus.”

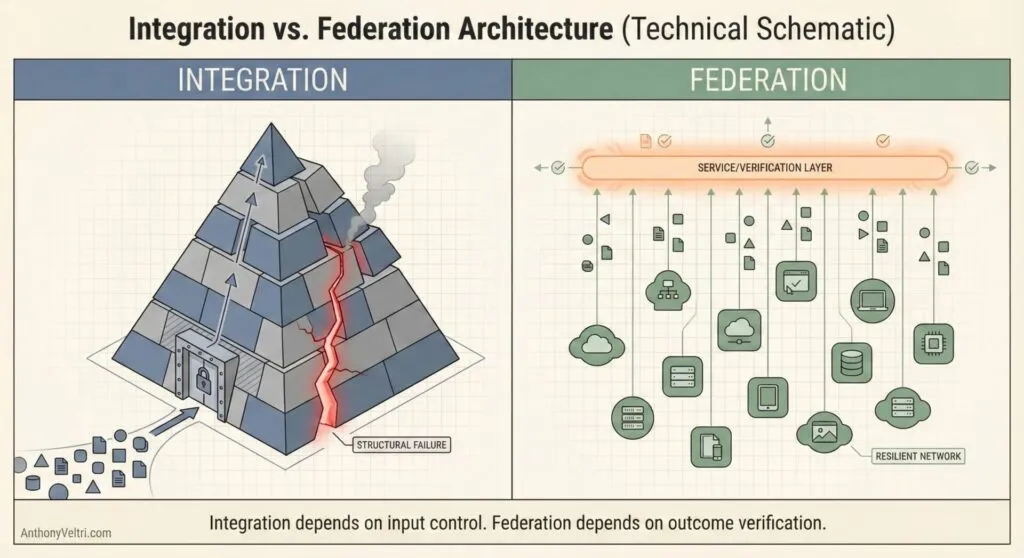

Integration vs Federation: The Core Pattern

The approach to AI adoption mirrors IT security approaches to device management. Both stem from an integration mindset applied to federation problems.

Integration Thinking:

- Centralize systems

- Standardize inputs

- Control at perimeter

- Binary compliance (pass/fail)

- Optimize for visible control mechanisms

Federation Thinking:

- Distribute systems

- Verify outcomes

- Control at service layer

- Proportional governance (matched to risk)

- Optimize for actual goals achieved

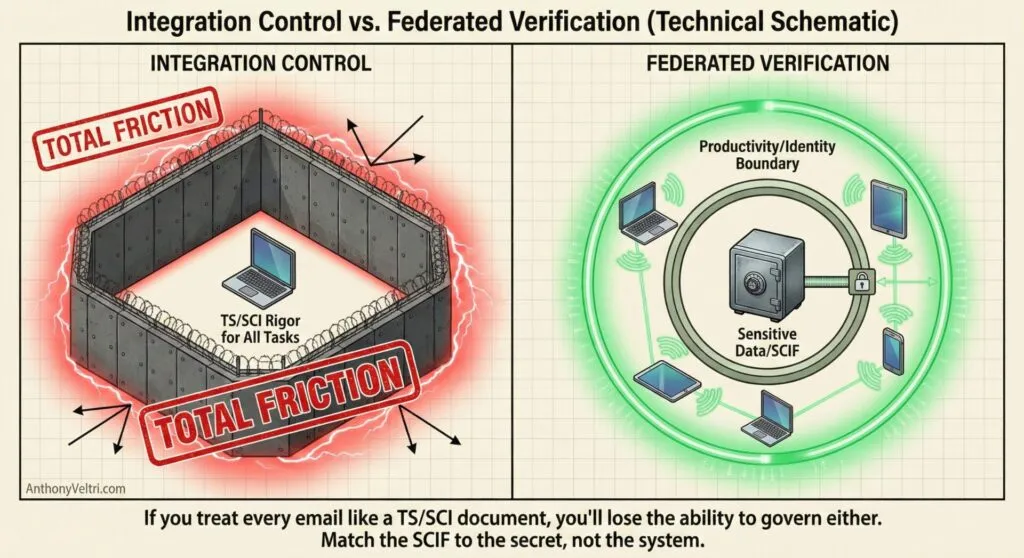

IT Security Example:

An EVP (who “lives” in Outlook and maybe Teams, and doesn’t drill down deeper than that) within the M365 tenant buys a personal gaming laptop just to run Outlook because company-provided devices are inadequate (and because she wants to game). IT Security blocks it, citing device policy. Result: shadow IT proliferates, the security engineer is frustrated, the EVP is frustrated, and actual security is worse.

What integration thinking produces:

- Device ownership as security boundary

- Binary enforcement (compliant device or nothing)

- Workarounds that bypass all governance

- Local optimization (security controls) destroying system outcomes (actual security)

What federation thinking produces:

- Identity and data as security boundary (zero trust)

- Conditional access policies at service layer (M365 tenant)

- Any compliant device can access (company, personal, contractor)

- Litigation hold, audit trails, compliance at tenant level

- Security engineer satisfied, EVP productive, actual security better

If the EVP only uses Microsoft 365 (Outlook, browser, Office apps), the security boundary should sit at the identity and data layers, not the physical hardware. With proper conditional access policies, any compliant device works. The organization optimized for visible control (device ownership) rather than actual outcomes (data protection, compliance, productivity).
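The difference between the two boundaries can be sketched as two decision functions. This is an illustrative model only, not the Microsoft 365 conditional access API: the `AccessRequest` fields, the sensitivity labels, and the rule that restricted data still requires a company device are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user_verified: bool     # identity confirmed, e.g. by multi-factor sign-in
    device_compliant: bool  # device meets the baseline (patched, encrypted), any owner
    device_owner: str       # "company", "personal", or "contractor"
    data_sensitivity: str   # hypothetical labels: "unclassified" or "restricted"


def integration_decision(req: AccessRequest) -> bool:
    """Device ownership is the boundary: anything not company-owned is blocked."""
    return req.device_owner == "company"


def federation_decision(req: AccessRequest) -> bool:
    """Identity and data are the boundary, evaluated at the service layer."""
    if not req.user_verified:
        return False
    if req.data_sensitivity == "restricted":
        # Assumed rule for the high-stakes core: keep the hardware requirement.
        return req.device_compliant and req.device_owner == "company"
    # Low-stakes edge: any compliant device may connect, whoever owns it.
    return req.device_compliant


# The EVP's personal laptop requesting unclassified email.
evp = AccessRequest(
    user_verified=True,
    device_compliant=True,
    device_owner="personal",
    data_sensitivity="unclassified",
)
print("integration:", integration_decision(evp))
print("federation:", federation_decision(evp))
```

The federated rule is not looser across the board. It still refuses unverified users and non-compliant devices, and it keeps the stricter check where the data warrants it.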

This example is the policy equivalent of treating every segment of a network as TS/SCI and requiring a SCIF for every interaction. While accessing top-secret data certainly requires specific devices, physical locations, and entitlements, applying that same cross-cutting rigor to unclassified email is a category error. When organizations refuse to distinguish between a high-stakes core and a low-stakes edge, they don’t achieve ‘Total Security’; they simply force their best people to build workarounds in the dark.

The same organization requiring device ownership for Outlook will require data standardization before AI search. Both stem from integration mindset: control the inputs rather than govern the outcomes.

Case Resolution: Applying Federation Thinking to Tribal Implications Search

Let’s revisit the Forest Service tribal implications search through the federation vs integration framework. This demonstrates how the theory translates to operational practice.

The Integration Approach (What’s Actually Happening):

Leadership requires structured, standardized data before AI can assist. The logic goes:

- Federal Register, handbooks, and guides exist in different formats

- AI “needs” consistent data structure to work properly

- Therefore: 18-24 month standardization project to unify formats

- Only after standardization can AI perform searches

- Result: Zero value extraction during preparation, AI advances 3-4 generations past planned architecture, employees use ChatGPT anyway (shadow IT), and the governance gap leadership was trying to prevent opens anyway

The Federation Approach (What Should Happen):

Query across heterogeneous sources as they exist today. The logic goes:

- Federal Register, handbooks, and guides exist in different formats

- Modern AI handles format translation at query time natively

- Therefore: Define quality bar for search results (takes 1 week)

- AI performs federated search across sources immediately

- Result: Extract 70% value immediately, refine as AI improves, measure actual outcomes (did it find relevant sections?), visible governance with proportional oversight

Quality Definition (10 Minutes, Not 6 Months):

For tribal implications search:

- “Find sections where tribal consultation might be required”

- “Acceptable: catches 80% of relevant sections, 20% false positives OK”

- “Fatal error: none, human reviews results anyway”

- “Provenance: citation to specific document and section”

That’s enough to start. You learn what refinements matter through operational use, not theoretical planning.
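A quality bar this small fits in a few lines of code, which is part of the point. The sketch below assumes that “20% false positives OK” means at most 20% of the returned sections may be irrelevant; that reading, the `QualityBar` and `evaluate` names, and the sample section labels are all illustrative rather than anything the agency has defined.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualityBar:
    min_recall: float = 0.80                # "catches 80% of relevant sections"
    max_false_positive_share: float = 0.20  # "20% false positives OK" (assumed reading)


def evaluate(returned: set[str], relevant: set[str], bar: QualityBar) -> dict:
    """Score one search run against the bar, using a human-reviewed answer key."""
    true_positives = returned & relevant
    recall = len(true_positives) / len(relevant) if relevant else 1.0
    fp_share = len(returned - relevant) / len(returned) if returned else 0.0
    return {
        "recall": recall,
        "false_positive_share": fp_share,
        "meets_bar": recall >= bar.min_recall
        and fp_share <= bar.max_false_positive_share,
    }


# Made-up section labels: a reviewer marked five sections relevant,
# and the search returned four of them plus one irrelevant section.
relevant = {"A", "B", "C", "D", "E"}
returned = {"A", "B", "C", "D", "X"}
report = evaluate(returned, relevant, QualityBar())
print(report)
```

The answer key comes from the human review that already happens, so measuring against the bar adds little work beyond recording what the reviewer found.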

The Provenance Layer:

For retrieval tasks (finding tribal implications), provenance means citation (where did you find this?), not justification (why did you conclude this?). That’s traceable without requiring uniform data formats.

When AI returns: “Federal Register 87 FR 12345, Section 4.2.3 discusses consultation requirements for projects affecting traditional cultural properties,” that’s sufficient provenance for the human reviewer to verify. You don’t need standardized input formats to achieve this. You need governance at the service layer (what claims can the system make, what evidence is required, how do we verify).

This is federation thinking applied operationally. The same pattern works for IT security (identity verification rather than device ownership), network security (zero trust rather than perimeter defense), and any domain where you’re tempted to control inputs when you should be governing outcomes.
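A minimal sketch of retrieval with provenance across differently shaped sources follows. Everything in it is hypothetical: the source names, the sample passages, and the adapter functions are invented for illustration, and a plain keyword test stands in for the AI model that would judge relevance in practice. What it shows is the shape of the approach: each source is read in its native format at query time, and every hit carries a citation a reviewer can check.

```python
from dataclasses import dataclass
from typing import Callable, Iterator


@dataclass(frozen=True)
class Hit:
    source: str   # which document the passage came from
    section: str  # section identifier as it appears in that document
    excerpt: str  # the passage itself, so a reviewer can verify it


def read_plain_text(raw: str) -> Iterator[tuple[str, str]]:
    """Adapter for a text file with one 'section: text' entry per line."""
    for line in raw.splitlines():
        section, _, text = line.partition(": ")
        if text:
            yield section, text


def read_records(raw: list[dict]) -> Iterator[tuple[str, str]]:
    """Adapter for a list of records with 'id' and 'body' keys."""
    for record in raw:
        yield record["id"], record["body"]


def federated_search(sources: dict, matches: Callable[[str], bool]) -> list[Hit]:
    """Query every source as it exists today; no shared schema is required."""
    hits = []
    for name, (adapter, raw) in sources.items():
        for section, text in adapter(raw):
            if matches(text):
                hits.append(Hit(source=name, section=section, excerpt=text))
    return hits


# Invented sample data in two different formats.
SOURCES = {
    "Sample handbook": (
        read_plain_text,
        "1.1: General filing procedures\n"
        "1.2: Consultation with tribal governments is required before approval",
    ),
    "Sample policy guide": (
        read_records,
        [
            {"id": "A-3", "body": "Projects near traditional cultural properties need tribal review"},
            {"id": "A-4", "body": "Parking rules for field offices"},
        ],
    ),
}

hits = federated_search(SOURCES, lambda text: "tribal" in text.lower())
for hit in hits:
    print(f"{hit.source}, section {hit.section}: {hit.excerpt}")
```

Adding a new source means writing one small adapter, not reformatting the source. The governance question then moves to the service layer: a result without a source and section is rejected.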

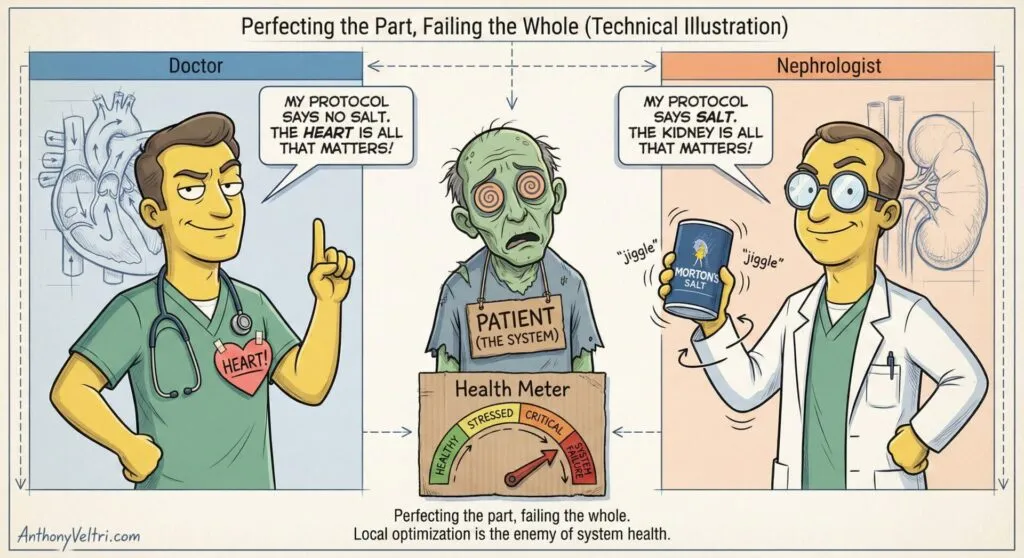

Local Optima vs System Optima

The cardiologist and nephrologist fighting over salt demonstrate local optimization destroying system health. Each specialist optimizes for their domain (heart health, kidney health) without considering the patient’s overall wellbeing.

Organizations do this constantly:

Security optimizes for device control (local)

- Productivity suffers

- Shadow IT emerges

- Net security decreases while “compliance” increases (system failure)

IT optimizes for data standardization (local)

- Mission capability delayed

- Employees use ChatGPT anyway

- Net governance decreases while “preparation” continues (system failure)

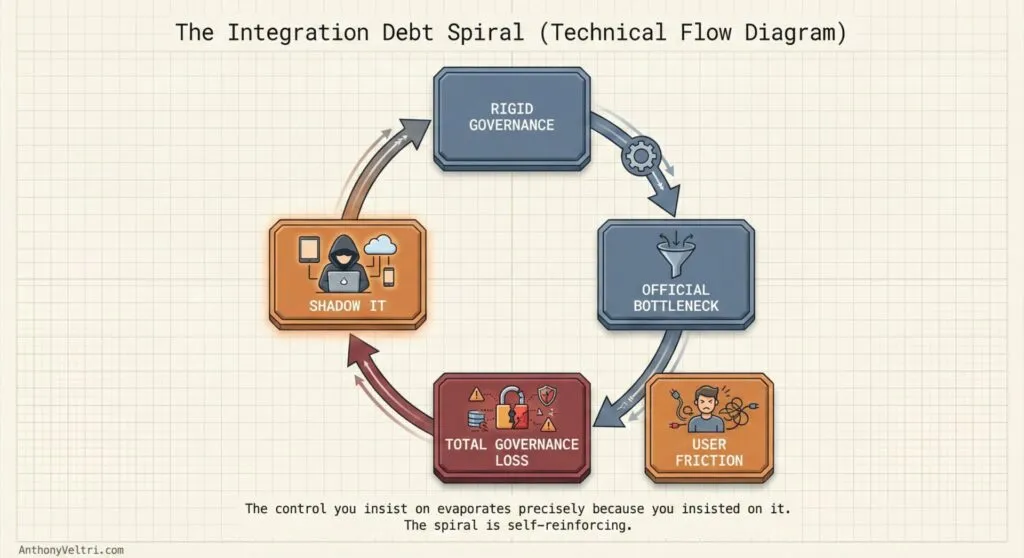

The Integration Debt Trap:

When you force federation problems through integration governance:

- Official systems become bottlenecks

- Capable people route around bottlenecks

- Shadow systems proliferate without governance

- Organization loses visibility into actual operations

- The control you were protecting evaporates precisely because you insisted on it

The EVP using a personal laptop for Outlook is identical to the Forest Service employee using ChatGPT for tribal implications search. Both are rational responses to systems optimized for control rather than outcomes.

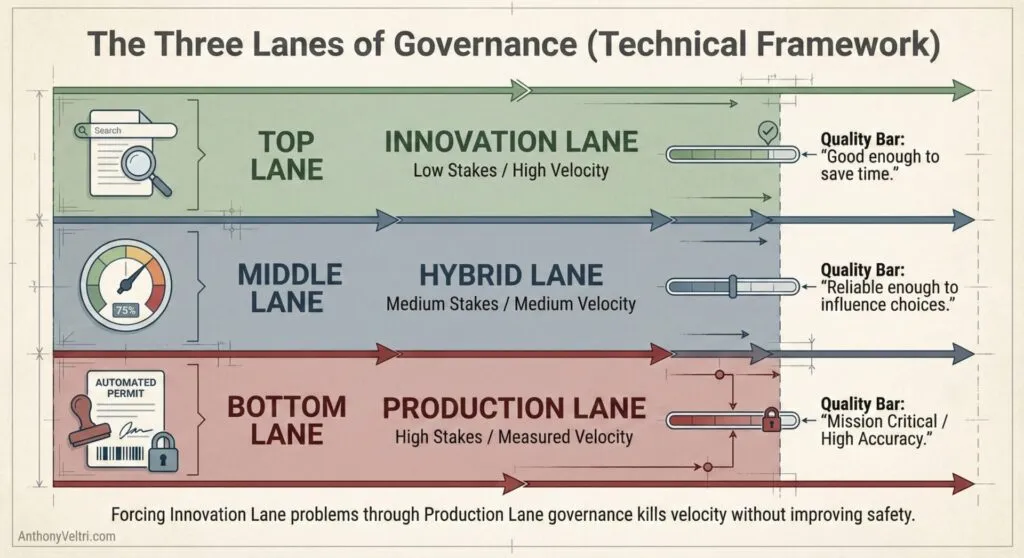

The Governance Mismatch

Organizations force low-stakes AI exploration through high-stakes governance, creating mismatches that kill velocity without improving safety.

Whether you utilize a two-lane system (Stability vs. Adaptation) or the three-lane framework shown above, the logic remains the same: you must stop forcing high-velocity innovation through the friction of production-grade governance.

Three Lanes of Activity:

Retrieval/Augmentation (Innovation Lane):

- Stakes: Low (human reviews results anyway)

- Velocity: High (immediate value extraction)

- Governance: Federated, proportional oversight

- Quality bar: “Good enough to save time”

- Example: Tribal implications search across documents

Decision Support (Hybrid Lane):

- Stakes: Medium (informs human decisions)

- Velocity: Medium (validate patterns, refine over time)

- Governance: Measured, evidence-based

- Quality bar: “Reliable enough to influence choices”

- Example: Fire risk scores for resource allocation

Autonomous Action (Production Lane):

- Stakes: High (system acts without human review)

- Velocity: Low (extensive validation required)

- Governance: Rigorous, auditable

- Quality bar: “Correct enough for mission-critical use”

- Example: Automated permit approvals, compliance decisions

The Failure Mode:

Organizations apply Production Lane governance to Innovation Lane problems:

- Tribal implications search forced through 24-month standardization

- Device policy treating Outlook access as mission-critical system

- Enterprise architecture reviews for document retrieval tools

Result: Innovation Lane generates zero value while waiting for permission, employees route around governance, shadow IT proliferates, actual control decreases.

Temporal Arbitrage vs Integration Debt

Old World Assumption:

- Technology stable, standardization valuable, integration wins

- True for mainframes, ERPs, data warehouses (1980-2010)

- Standardization effort paid off because technology changed slowly

New World Reality:

- AI advances faster than standardization completes

- Preparation work becomes obsolete before it ships

- Temporal arbitrage: extract value now while the technology advances, or extract none while you wait

What Leaders Should Ask Instead

Not: “How do we standardize data for AI?” But: “How do we extract value from data as it exists today?”

Not: “How do we control AI use centrally?” But: “How do we govern AI proportional to decision stakes?”

Not: “How do we ensure everyone uses the same system?” But: “How do we ensure everyone can accomplish their mission?”

Not: “How do we prevent any AI mistakes?” But: “How do we learn from small mistakes before they become big ones?”

Not: “How do we maintain device ownership for security?” But: “How do we achieve security goals (data protection, audit trails, compliance) at the appropriate layer?”

Resolution Framework

Match Governance to Stakes:

High risk (autonomous decisions, financial transactions, PII access):

- Tight control appropriate

- Extensive validation required

- Formal change management

- Audit trails, compliance documentation

Medium risk (decision support, analysis, structured queries):

- Conditional access, proportional oversight

- Measure actual outcomes

- Iterate based on results

- Balance velocity with validation

Low risk (retrieval, search, productivity tools):

- Verify identity, protect data

- Allow tool flexibility

- Fast feedback loops

- Innovation lane governance
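The three tiers above can be written down as a lookup, sketched here under stated assumptions. The activity labels are shorthand invented for this example, the controls are copied from the lists above, and letting the highest-stakes activity set the tier is one reasonable design choice, not a prescribed rule.

```python
from enum import Enum


class Tier(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


# Hypothetical activity labels drawn from the examples in each tier.
HIGH_RISK = {"autonomous_decision", "financial_transaction", "pii_access"}
MEDIUM_RISK = {"decision_support", "analysis", "structured_query"}
LOW_RISK = {"retrieval", "search", "productivity_tool"}

CONTROLS = {
    Tier.HIGH: [
        "tight control",
        "extensive validation",
        "formal change management",
        "audit trails and compliance documentation",
    ],
    Tier.MEDIUM: [
        "conditional access with proportional oversight",
        "measure actual outcomes",
        "iterate based on results",
        "balance velocity with validation",
    ],
    Tier.LOW: [
        "verify identity and protect data",
        "allow tool flexibility",
        "fast feedback loops",
        "innovation lane governance",
    ],
}


def classify(activities: set[str]) -> Tier:
    """The highest-stakes activity in a use case sets its governance tier."""
    unknown = activities - (HIGH_RISK | MEDIUM_RISK | LOW_RISK)
    if unknown or not activities:
        # Refuse to guess: an unlabeled activity needs a human decision.
        raise ValueError(f"unclassified activities: {sorted(unknown)}")
    if activities & HIGH_RISK:
        return Tier.HIGH
    if activities & MEDIUM_RISK:
        return Tier.MEDIUM
    return Tier.LOW


tier = classify({"retrieval", "search"})
print(tier.value, "->", CONTROLS[tier])
```

A document search that also touches PII would classify as high risk under this sketch, so the tier follows what the tool does with data and not what the tool is called.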

Choose Federation Over Integration When:

- Multiple heterogeneous sources exist (documents, systems, formats)

- Speed of change exceeds speed of standardization

- Users have legitimate need for tool choice

- Actual goal (security, governance, quality) achievable at service layer rather than input layer

- Cost of delayed value exceeds cost of heterogeneity
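
The five conditions above can be kept as an explicit checklist. This is a rough sketch under stated assumptions: the field names are hypothetical paraphrases of the bullets, and the helper only reports which conditions hold. The note does not say how many must be true before federation is the better choice, so no threshold is encoded.

```python
from dataclasses import dataclass, fields


@dataclass
class FederationSignals:
    """One boolean per condition in the list above (names are illustrative)."""
    heterogeneous_sources: bool
    change_outpaces_standardization: bool
    users_need_tool_choice: bool
    goal_achievable_at_service_layer: bool
    delay_costs_more_than_heterogeneity: bool


def signals_met(signals: FederationSignals) -> list[str]:
    """Return the names of the conditions that currently hold."""
    return [f.name for f in fields(signals) if getattr(signals, f.name)]


# Hypothetical assessment of a document-search use case.
assessment = FederationSignals(
    heterogeneous_sources=True,
    change_outpaces_standardization=True,
    users_need_tool_choice=False,
    goal_achievable_at_service_layer=True,
    delay_costs_more_than_heterogeneity=True,
)
met = signals_met(assessment)
print(f"{len(met)} of 5 federation conditions hold: {met}")
```

Writing the answers down this way makes the judgment reviewable: a leader who still chooses integration can see which conditions they are overriding.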

Define Minimum Viable Quality:

Defining minimum viable quality takes 10 minutes, not 6 months of data formatting. It delivers value today while you learn which refinements actually matter through operational contact with reality.

Build for Adaptation:

Don’t freeze definitions and optimize for stability. Build systems that accommodate changing definitions of quality and correctness as you learn.

- Define minimum viable quality now

- Extract value immediately

- Measure actual outcomes

- Refine based on operational learning

- Update as AI capabilities advance

This works operationally because solo integrated operators can iterate end-to-end without multi-actor coordination overhead. Federation thinking enables this. Integration thinking blocks it.
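
One way to keep a quality definition adaptable is to treat it as a replaceable set of named checks rather than a frozen schema. The sketch below is illustrative only: the `Answer` type, the two checks, and `quality_v1` are hypothetical, chosen to show how a ten-minute definition for a retrieval task might look and how it could be revised later without rebuilding anything.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Answer:
    text: str
    citations: list[str] = field(default_factory=list)


# A quality check is a predicate over an answer.
QualityCheck = Callable[[Answer], bool]


def is_non_empty(answer: Answer) -> bool:
    return bool(answer.text.strip())


def has_citation(answer: Answer) -> bool:
    return len(answer.citations) > 0


# Version 1 of the quality definition: a hypothetical minimum viable rule set.
quality_v1: dict[str, QualityCheck] = {
    "non_empty": is_non_empty,
    "has_citation": has_citation,
}


def failed_checks(answer: Answer, checks: dict[str, QualityCheck]) -> list[str]:
    """Return the names of the checks this answer does not pass."""
    return [name for name, check in checks.items() if not check(answer)]
```

An answer with no source attached, such as `Answer("Consultation is required.")`, fails only `has_citation`. Refining the definition later means adding or swapping an entry in the dictionary, which is the kind of change a single operator can make end to end.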

Test Questions for Leaders

Governance Visibility: “How many of your employees are already using ChatGPT or Claude for work tasks on personal devices?”

If you don’t know the answer, you’ve already lost governance. If the answer is “many,” your slow preparation strategy is creating exactly the chaos you’re trying to prevent.

Temporal Arbitrage: “Can individual contributors extract 70% of the value this quarter using messy data and federated AI, while central IT is still in month 3 of a 24-month standardization project?”

If yes, you’re paying opportunity cost daily while preparing for perfection that will be obsolete on arrival.

Integration vs Federation: “Are we treating this as an integration problem (centralize, standardize inputs) or a federation problem (distribute, verify outcomes)?”

Most AI adoption failures stem from forcing federation problems through integration governance.

Local vs System Optimization: “Are we optimizing for visible control mechanisms (device ownership, data standardization) or actual goals (security, quality, mission capability)?”

If the mechanism has become more important than the goal, you’re stuck in a local optimum that destroys system outcomes.

Proportional Governance: “Are we applying production lane governance to innovation lane problems?”

Forcing low-stakes retrieval through governance designed for high-stakes decisions kills velocity without improving safety.

Key Insights

- The monopoly on services you provide creates no buffer against the capability gap in how you provide them. Employees will use better tools regardless of policy. Policy does not stop Shadow IT. It only stops Visible IT.

- Integration brittleness stems from multi-actor dependency, not integration itself. When operating solo with complete end-to-end capability, integration becomes highly resilient. When coordinating across organizational boundaries, federation wins.

- For retrieval and augmentation tasks, provenance means citation (where did you find this?), not justification (why did you conclude this?). These require different architectures and governance models.

- AI advancement rate exceeds organizational standardization rate. The temporal math is decisive: you can’t amortize 24 months of preparation across 6 months of technology relevance. That is a 400% cost measured against technology lifespan: preparation takes four times as long as the technology stays relevant.

- Organizations consistently choose integration theater (visible control mechanisms) over federation reality (actual outcomes achieved through distributed systems with appropriate governance).

- The control you insist on through integration thinking evaporates precisely because you insisted on it. Shadow IT emerges when official systems can’t meet mission needs.

- Standardization projects optimized for stable technology (ERPs, data warehouses) fail when applied to exponentially advancing technology (AI). Not because standardization is wrong, but because the temporal economics have inverted.
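
The temporal math behind the 400% figure is a single division. A minimal sketch, assuming the note's own numbers (24 months of preparation, 6 months of technology relevance); the function name is illustrative:

```python
def preparation_ratio(prep_months: float, relevance_months: float) -> float:
    """Preparation time as a multiple of the technology's relevance window."""
    if relevance_months <= 0:
        raise ValueError("relevance_months must be positive")
    return prep_months / relevance_months


ratio = preparation_ratio(24, 6)
print(f"{ratio:.0%}")  # 400%
```

The same division explains the "3-4 generations" estimate in the problem statement: 18 to 24 months of standardization at 6 months per generation is 3 to 4 generations.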

Operational Provenance

These patterns emerged from:

- 20+ years federal experience (DHS enterprise architecture, disaster response, wildland fire)

- Forest Service observations of AI adoption blockers (2024-present)

- Recognition-Primed Decision Making research and application (Gary Klein, Gordon Graham)

- Two-lane architecture framework (stable/adaptive lanes)

- Federation vs integration decision frameworks

Not theoretical. These are operational patterns observed across government and enterprise contexts where integration thinking creates the problems it’s designed to prevent.

Closing FIELD NOTE: The Steam Donkey and the 400% Cost of the Past

Location: The Interface Failure Zone (and the Pacific Northwest, circa 1910)

Status: Category Shift in Progress

There is a specific kind of weariness that comes from prying at a massive problem with the wrong tool. You see it in the eyes of the 19th-century logging crew, men who spent their lives mastering the Peavey and other hand tools to roll logs that were just at the edge of human physical capacity. They were perfecting a linear skill in an era that was about to be defined by exponential tension.

Today, we are repeating this pattern in the digital “slash”. As AI advances faster than our ability to standardize it, we are training our best people (our Operators) to “pry harder” with manual integration projects and rigid governance. We are applying 24-month standardization timelines to 6-month technology generations, resulting in a 400% cost measured against the relevance of the tool itself.

This note is for the leaders who realize that their monopoly on sovereign authority provides no buffer against the capability gap of how they execute it. If you treat every email like a TS/SCI document and every AI search like a multi-year ERP deployment, you don’t achieve security… you simply force your best loggers to find a different forest.

Last Updated on December 24, 2025