Field Note: The Symbol Is Not the Signal

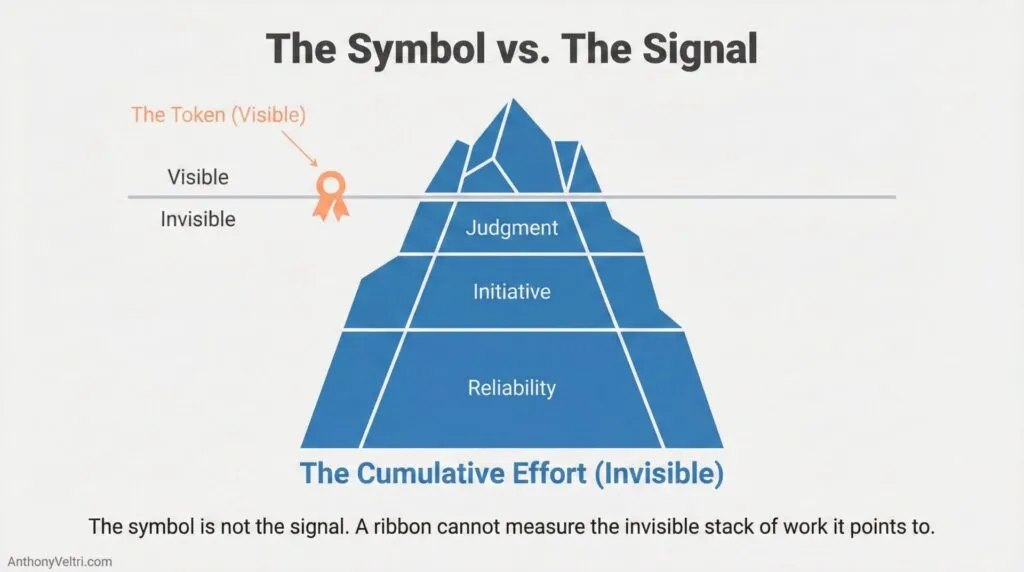

A ribbon cannot measure the invisible stack of work it points to.

Someone asked me about a time I was proud to see a colleague or friend recognized.

I thought for a while. I did not choose the biggest award I have ever witnessed. I chose a moment where the token was trivial compared to the signal behind it.

That difference has made me appreciate people more accurately. It has also exposed weak interfaces in systems that quietly fail the people doing the real work.

The symbol is not the signal.

What the token hides

The award itself was not impressive. A ribbon. A belt buckle. A coaster set. Something you could lose in a drawer.

That is why it stuck. The symbol was trivial compared to the cumulative effort required to earn the moment it represented.

The people who earn tokens like these are rarely competing for recognition. They are just being consistent. And consistent contributors are easy to ignore because they do not create drama, friction, or noise.

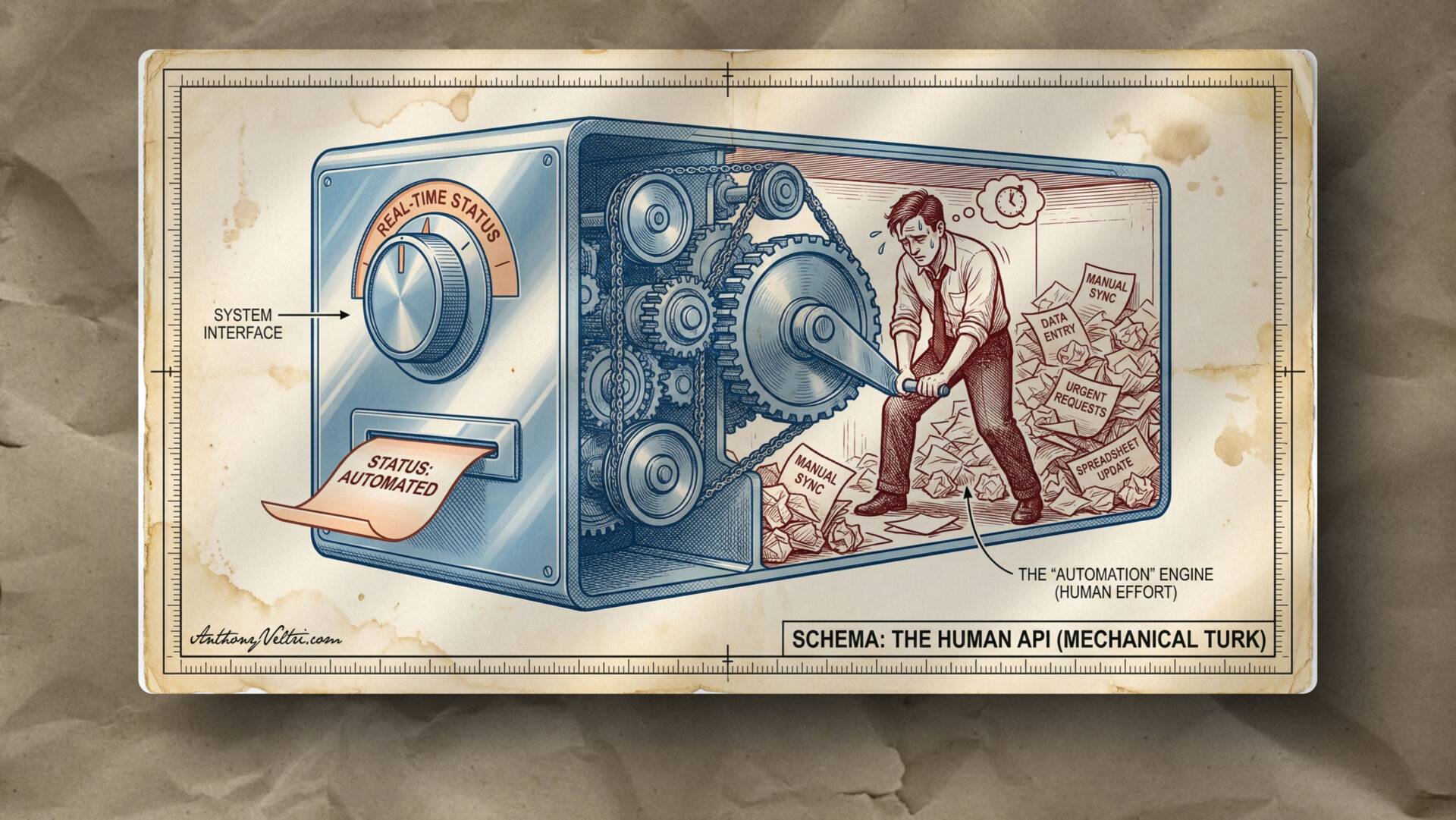

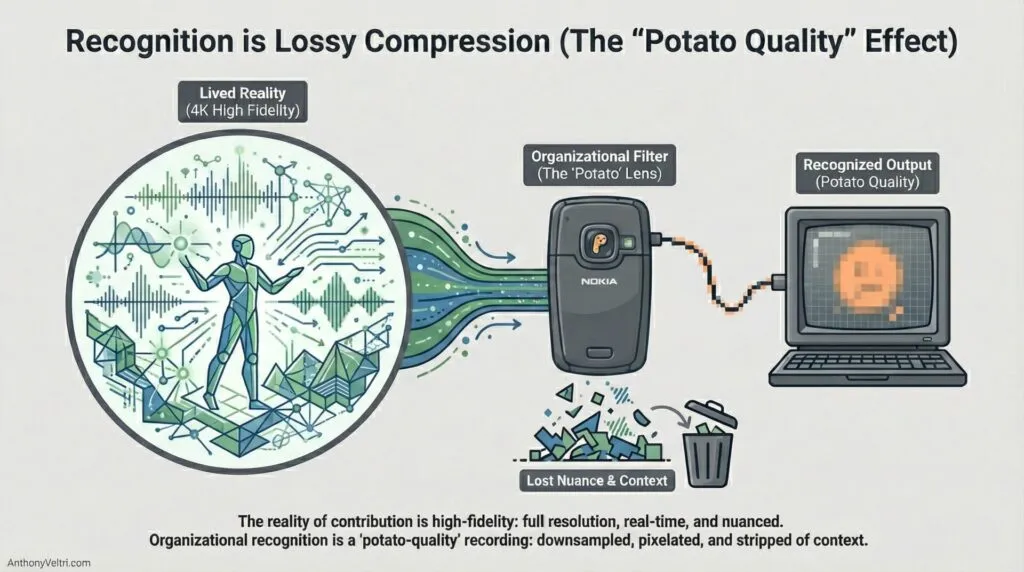

Recognition is a weak interface

Recognition is often late, constrained, and low resolution. It is a thin interface between real contribution and public understanding.

A ribbon cannot represent the years of judgment, initiative, and quiet reliability that made the work possible. But it can act like a citation. It makes the path partially legible to people who were not in the room.

This is the part that changed how I see systems.

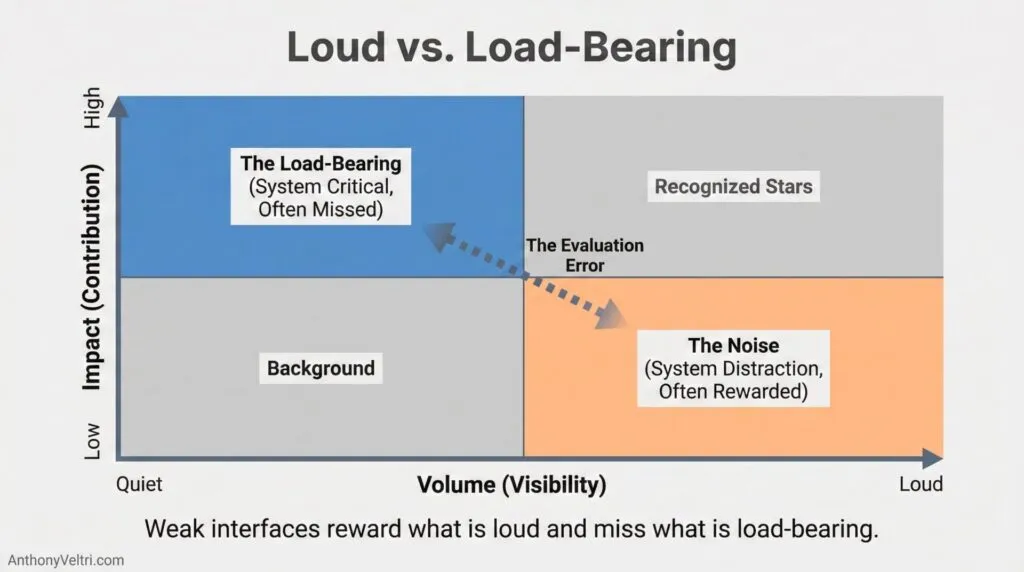

When recognition is weak, the system tends to reward what is loud and miss what is load-bearing.

The negative space of performance

To find the load-bearing work, you have to learn to see negative space.

Most systems are tuned to detect action: the crisis solved, the bug fixed, the late-night heroics. These are visible signals.

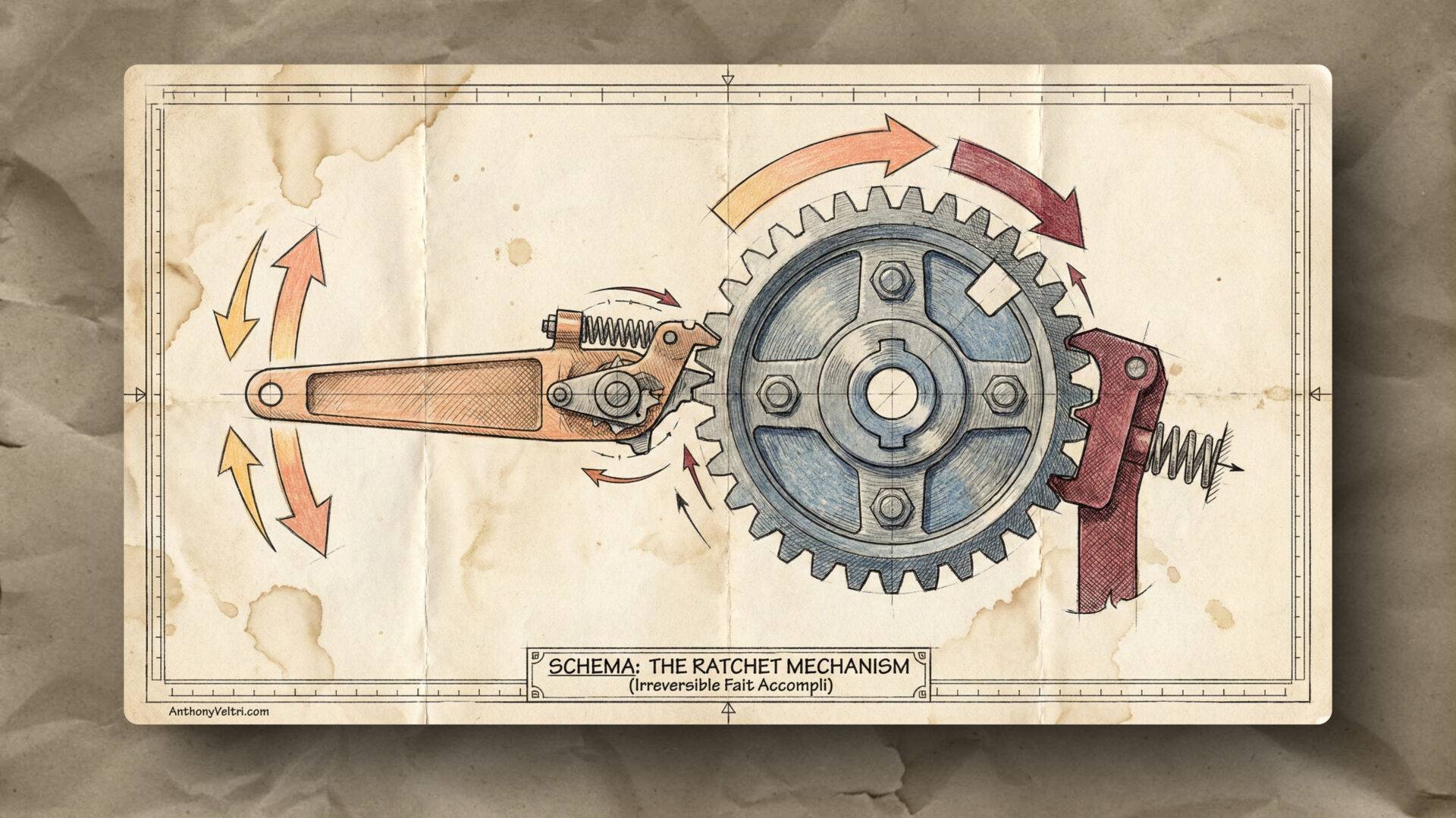

But reliability is defined by the absence of events.

You spot the quiet contributor not by what they are doing, but by what isn’t happening around them. The crisis that didn’t spark. The timeline that didn’t drift. The friction that didn’t grind the team down.

You are looking for silence where there is usually chaos.

If you only measure the visible intervention, you will consistently undervalue the invisible prevention.

This cuts both ways

I used to think this only mattered in one direction.

On the way up: we under-credit quiet excellence because the signals are weak and our default assumptions (priors) are lazy.

On the way down: we over-blame people in bad circumstances because we cannot see the causal path that led there.

Learning only one direction is better than noticing neither, but it is still incomplete. Proper evaluation requires symmetry.

A label is not a causal path. A token is not a full measurement. An outcome is not a moral verdict.

A practical test

When you see a label, a title, an award, or an outcome, ask:

- What am I using as evidence here?

- What is that evidence predictive of, specifically?

- What might be invisible that would change my evaluation?

- What evidence would update me, not just reassure me?

Where this connects to Doctrine

This is the same evaluation problem I wrote about in “The Audition Trap: How Panel Interviews Miss The People You Need Most”: a format can feel fair while still producing systematic false negatives.

It also maps directly to Doctrine 22: “When it depends” is the right answer, because priors and base rates shape how we interpret outcomes when the causal path is hidden.

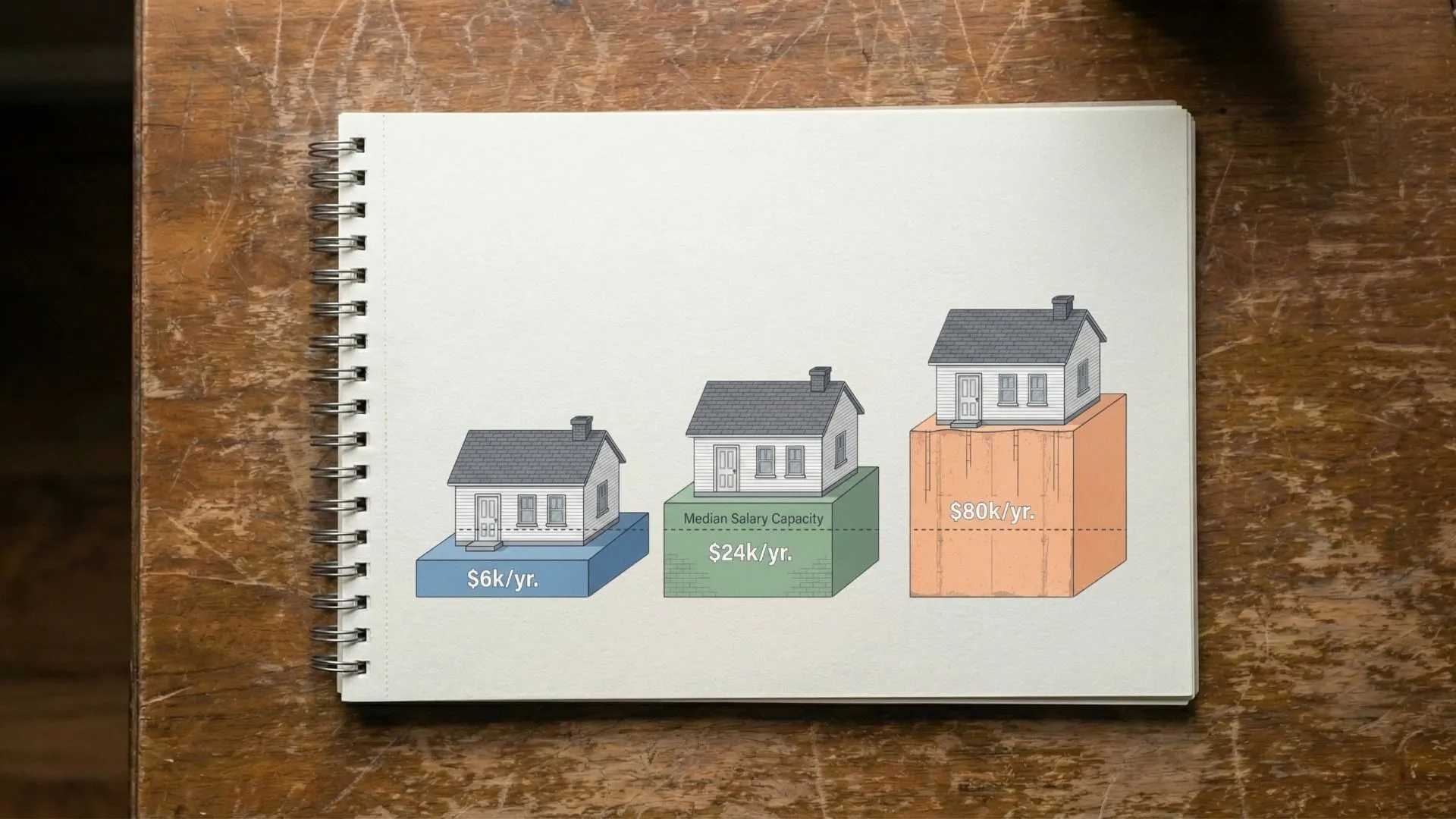

And it connects to runway thinking in “College Financing and the 5-year home runway”: slack changes whether bad luck becomes survivable or catastrophic.

Closing question

Where in your world are good people being under-credited because the interface for recognizing contribution is weak?

Last Updated on December 14, 2025